Part 3: Research Funding Inequality

The NIH was allocated $41.6 billion in 2020, about $30 billion of which was awarded through 56,000 grants. Academic articles have frequently criticized the NIH for how unequally it distributes those grants. Inequality in grant distribution has been rising since 1985, according to a 2017 article cited throughout this section, and continues to reach new extremes.35 I’ll tackle a few of the biggest examples of funding inequality and then discuss whether the current distribution is fair and/or efficient.

Researchers

In 2017, 1% of NIH extramural grantees received 11% of the total funds. The top 10% of researchers received over 40% of the funds. 36 37 This is a slightly wider gap than income inequality in the general U.S. population, where the top 10% of earners received 40% of (post-tax) income.

The proximate mechanisms of this imbalance include established researchers getting larger grants, holding more simultaneous grants, and renewing existing grants more easily than newcomers win their first. Many researchers worry that the current structure permits a snowballing effect, where established researchers amass so many grants that they drain the finite common grant fund at the expense of newer researchers.

Institutions

The NIH gives about 50% of all extramural grant money to 2% of applying organizations, most of which are universities or research facilities attached to universities. 38 The top 10 NIH recipients (out of 2,632 institutions) 39 received $6.5 billion in 2020. This is 22% of the NIH’s total extramural grant budget ($29.5 billion40), and 16% of the NIH’s entire budget.

In 2020, the ten largest recipients of NIH money were: 41

- Johns Hopkins University - $807 million through 1,452 awards

- Fred Hutchinson Cancer Research Center - $758 million through 301 awards

- University of California San Francisco - $686 million through 1,388 awards

- University of California Los Angeles - $673 million through 884 awards

- University of Michigan Ann Arbor - $642 million through 1,326 awards

- Duke University - $607 million through 931 awards

- University of Pennsylvania - $594 million through 1,267 awards

- University of Pittsburgh at Pittsburgh - $570 million through 1,158 awards

- Stanford University - $561 million through 1,084 awards

- Columbia University Health Sciences - $559 million through 1,003 awards
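As a quick check on the shares quoted above, the ten awards in this list can be summed and compared against the two budget figures given in the text ($29.5 billion extramural, $41.6 billion total). This is only an arithmetic sketch: the variable names are illustrative, and the dollar amounts are the rounded figures from the list.

```python
# Awards to the ten largest 2020 recipients, in millions of dollars,
# copied from the rounded figures in the list above.
top10_awards_musd = [807, 758, 686, 673, 642, 607, 594, 570, 561, 559]

EXTRAMURAL_BUDGET_MUSD = 29_500  # $29.5 billion, as quoted in the text
TOTAL_BUDGET_MUSD = 41_600       # $41.6 billion, as quoted in the text

top10_total = sum(top10_awards_musd)
share_of_extramural = 100 * top10_total / EXTRAMURAL_BUDGET_MUSD
share_of_total = 100 * top10_total / TOTAL_BUDGET_MUSD

print(f"Top 10 total: ${top10_total / 1000:.1f} billion")
print(f"Share of extramural budget: {share_of_extramural:.0f}%")
print(f"Share of total budget: {share_of_total:.0f}%")
```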

Add up the top 30 recipients, and the sum is $14 billion. That’s 44% of the NIH’s extramural budget, and 36% of the NIH’s total budget.

These figures are even higher if you combine funding across a university’s affiliated institutions. For instance, Harvard University is connected to Massachusetts General Hospital, Boston Children’s Hospital, and the Broad Institute of MIT and Harvard, which are respectively the 12th, 42nd and 45th largest recipients of NIH funds.

The top institutional beneficiaries of NIH funding have also remained remarkably consistent over time. Of the top 50 beneficiaries in 1992, 44 remained in the top 50 in 2003, and 40 remained in 2020. 39 Of the top 15 beneficiaries in 1992, 14 remained in the top 15 in 2003, and 13 remained in 2020.

On the other hand, most NIH institutional beneficiaries get very little funding. Out of the 2,632 beneficiaries in 2020, 1,055 (40%) received less than $1 million.

Geography

The geographic distribution of extramural grants almost entirely stems from the distribution of universities throughout the country. The NIH gives about 50% of extramural grant money to just five states:42 California, New York, Massachusetts, Maryland, and North Carolina.

The five states with the least funding are, in order from least to most: Wyoming, Alaska, Idaho, South Dakota, and North Dakota. From the NIH’s most recent data, California currently receives $6.7 billion in active grants. Wyoming, $16.5 million.43

Gini Coefficients

Though grant applicants (supposedly) don’t know who their primary reviewers are, reviewers know whose grants they are evaluating. Thus, it’s possible that grant applicants from elite research institutions get a passive evaluation boost by sheer association and prestige, though this boost could be interpreted rationally (the best researchers are probably at the best universities) or irrationally (arbitrary prejudgment). 44 Small experiments in which reviewers are blinded to race, for example, show unbalanced effects on overall grant scores, and reviewers are often still able to correctly guess who wrote the grant. 45

The Gini coefficient is the standard economic metric used for calculating income inequality. Countries with less inequality, like Iceland and Slovakia, have lower Gini coefficients (typically in the 0.25-0.30 range). Highly unequal countries, like South Africa and Brazil, are in the 0.50-0.60+ range. The United States has a Gini coefficient of 0.41 and the United Kingdom, 0.35.

In 2020, the Gini coefficient for the NIH’s extramural institutional recipients was 0.47. If that were a country, it would be the 23rd most unequal in the world, just ahead of Venezuela. 46
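For readers unfamiliar with the metric, here is a minimal sketch of how a Gini coefficient can be computed from a list of award totals. It uses one common rank-weighted formula; the function name and the toy inputs are illustrative assumptions, not the method or data behind the 0.47 figure above.

```python
def gini(values):
    """Gini coefficient of non-negative amounts (0 = perfectly equal)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        raise ValueError("need at least one positive value")
    # Weight each sorted value by its 1-based rank.
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Toy inputs (hypothetical, not NIH data):
print(gini([5, 5, 5, 5]))   # everyone receives the same amount
print(gini([0, 0, 0, 20]))  # one recipient receives everything
```

With this formula, a group of n recipients in which one receives everything scores (n - 1) / n, so the value approaches 1 only as the group grows large.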

Did the Boom Decade cause a concentration of funding among top NIH institutional recipients?

Between 1993 and 2003, the NIH’s budget increased by 164%, rising from $10.3 to $27.2 billion.7 This marked shift slightly decreased funding inequality, as measured by Gini scores.

During that ten year period, earnings for the top 50 NIH recipients increased from $4.3 billion to $7.5 billion. Meanwhile, the share of extramural funding (excluding contracts) earned by the top 50 NIH recipients decreased from 59% to 55%, and the number of institutional recipients increased from 1,653 to 2,350, or 42%. The Gini coefficient slightly decreased, from 0.51 to 0.49.

Out of curiosity, I checked the numbers on the lowest-earning NIH institutional recipients to see how they fared over the Boom Decade. I picked $1 million in 2003 as a fairly arbitrary cutoff point; it represents two to four R01 grants.

Of the 2,755 institutional recipients in 2003, 2,017 (73%) earned less than $1 million. In 1992, out of 1,653 recipient institutions, 1,176 (71%) earned less than $762,500 (=$1 million 2003 USD).

In other words, the distribution of NIH funds during the Boom Decade was remarkably consistent. And that distribution stayed nearly unchanged for the two decades after the Boom Decade. The percentage of extramural funding received by the top 50 beneficiaries:

1992 – 59%

2000 – 56%

2003 – 55%

2010 – 56%

2020 – 57%

Grant distributions are determined by thousands of scientists across hundreds of study sections and specialized domains. It’s a mystery, then, how all these people, with seemingly no coordination, distributed funds in almost exactly the same pattern over thirty years.

The Case for Current Efficiency

Whether the current, lopsided distribution of extramural grants promotes or hinders efficiency seems to be one of the most contentious issues in the NIH.

Defenders of the status quo have a simple but valid argument: The best researchers tend to congregate at the best institutions, they say, and so it’s reasonable that Johns Hopkins, UPenn, the top UCs, Yale, Harvard, and so forth would have the best labs, the best equipment, the best faculty, and therefore would earn the most grant money. In turn, the NIH should be funneling a highly disproportionate amount of money to these institutions for the sake of efficiency.

One of the major reasons some individuals accumulate lots of grant money is that they need multiple concurrent, consecutive grants to finance expensive research. There is indeed a snowballing effect, but perhaps it is more efficient to pile a relatively large amount of money on a relatively small number of researchers, rather than leave top-level researchers with fewer grants and less money for the sake of distributing funds to marginally worse researchers.

It’s not necessarily true, though, that the best researchers work at the highest-ranked schools. Studies show 47 that papers authored by researchers at a top school, like Harvard or Stanford, are more likely to receive a higher citation count, regardless of the quality of the work. It’s difficult to measure truly transformative research impacts.

Other studies indicate that the marginal value of NIH funding declines past a point that isn’t far from the median grant (I detail them in the next section), and thus status quo critics endorse spending caps. But these studies are simplifying complex outcomes.

Research impact often isn’t felt for many years after projects are completed, and it can’t be easily captured with blunt proxies like publications and citations. Caps might pull money away from some high-spending, low-efficiency researchers, but they will also defund the best and brightest at the NIH who have earned their huge grants by proving their ability.

Some interviewees claim that the NIH has already sacrificed efficiency for the sake of grant distribution. In particular, some said that the NIH is biased in favor of giving grants to institutions based in low population states, likely due to political concerns, or what one interviewee called an “egalitarian impulse.” If that’s the case, then critics have a legitimate qualm that the NIH is sacrificing some degree of efficiency by rewarding inferior researchers for political purposes.

The Case Against Current Efficiency

All of the above may be true, and yet grants are currently too lopsided in their distribution to optimize efficiency. I personally lean toward this opinion, albeit with low confidence.

There are numerous studies that attempt to determine the marginal value of grants at different funding levels, but they all rely on citations and publications as metrics, which I think don’t work as proxies for quality. Not only are citations and publications highly variable in their quality, but larger, more established labs and researchers may very well publish less often and get fewer citations because they aren’t as bound to the “publish or perish” mindset of much of academia.

Nevertheless, I think there is likely somewhat of a detrimental bias in favor of top universities and established researchers simply due to the incentives at play. The entrenched interests are heavily dependent upon the NIH and have the means to influence its grant distribution process, both through official channels (as will be explored in Part 7) and through passive cultural norms which reinforce the superiority of established labs and researchers. But again, there is no strong empirical evidence to support this claim.

Proposed Reforms to Funding Inequalities

There have been calls for the NIH to cap funding per researcher since at least 1985.48 In 2017, University of North Carolina professor Mark Peifer publicly called on the NIH to impose a cap on funding per grant recipient. His paper suggests a $1 million cap as a soft target, which would still permit many large grant recipients, but prohibit significant, low-margin spending.49 The NIH’s grant database identifies only 542 grants made in 2020 with sizes between $1 million and $10 million.50 A separate analysis of 2015 data placed a theoretical $800,000 cap on individual researchers; that strategy would have freed up $4.22 billion (after bumping all <$200,000 grants up to $200,000) to be distributed to other researchers for higher-yield projects. If the new researchers were each given awards of $400,000, then 10,542 new researchers could have been funded, constituting a 20% increase in total grants.
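The mechanics of that 2015 analysis can be sketched in a few lines: trim everyone above the cap, top up everyone below the floor, and divide what remains into fixed-size new awards. The function name and the toy funding list below are hypothetical; only the $800,000 cap, $200,000 floor, $400,000 award size, and $4.22 billion total come from the text.

```python
def simulate_cap(funding, cap=800_000, floor=200_000, new_award=400_000):
    """Return (freed_dollars, new_awards) for a hypothetical per-researcher cap."""
    # Money released by trimming every researcher above the cap.
    trimmed = sum(f - cap for f in funding if f > cap)
    # Money spent raising every researcher below the floor up to it.
    topped_up = sum(floor - f for f in funding if f < floor)
    freed = trimmed - topped_up
    # Whole new awards that the freed money could pay for.
    new_awards = max(freed, 0) // new_award
    return freed, new_awards


# Hypothetical per-researcher totals in dollars (not NIH data).
toy_funding = [1_500_000, 900_000, 500_000, 100_000]
print(simulate_cap(toy_funding))

# The text's own figures: $4.22 billion freed, $400,000 per new award.
print(4_220_000_000 // 400_000)
```

Dividing the rounded $4.22 billion by $400,000 gives about 10,550 awards, close to the 10,542 quoted above; the small gap presumably reflects rounding in the $4.22 billion figure.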

Even if the base data are valid, I assume there are highly talented researchers who can use higher dollar amounts effectively. Likewise, I’m sure there are projects which would benefit from a grant of, say, $450,000 rather than $400,000. Perhaps strict caps risk squeezing researchers into needless restraints, which could hurt research for the sake of meager savings.

The Failure of the Grant Support Index

Perhaps the most blatant example of a failed reform effort at the NIH is the aborted Grant Support Index (GSI).

In May 2017, the NIH announced the implementation of the GSI to limit grants to top researchers so that funds could be distributed more widely. The idea was simple: assign points to researchers based not only on the size of their grants, but also on their specific field, thereby accounting for variability in funds required for different types of research. Then, there would be a point cap (roughly equivalent to three concurrent R01 grants) which, if surpassed, would require the applicant to jump through extra hoops in future grant application processes and face lower odds of acceptance. It was by no means a draconian limit, as it would only impact 6% of NIH-funded researchers. But it was a concrete step in limiting funding concentration.51

The plan “caused an uproar among many scientists,” according to Science. Some researchers expressed legitimate grievances, like how the GSI would discourage lab collaboration, while others almost certainly just feared they would lose out on NIH dollars. Under pressure, the NIH relaxed the point requirement so that it would only impact an estimated 3% of NIH grant recipients.52

Just over one month after its introduction, the NIH abandoned the GSI entirely.

According to one interviewee, who used to hold a high-ranking advisory position in the NIH, pressure from top researchers and labs pushed then-director Collins to end the GSI. It’s impossible to prove this, but a 2017 paper corroborates the idea: 53

“Not too surprisingly, there was pushback [against the GSI], the most strident and well-publicized of which seemed to be from a small number of very well-funded scientists who seem unwilling to relinquish their hold on a disproportionate amount of NIH funds. Some of their rhetoric was heated—one was quoted in the Boston Globe as saying, ‘If you have a sports team, you want Tom Brady on the field every time. You don’t want the second string or the third string.'” 54

It seems that well-funded and powerful scientists, threatened by this new approach, tipped the balance. The Advisory Committee that made the decision to reverse the GSI did not represent the diversity of career stages affected by this critical decision, according to one interviewee.

“The reversal of the GSI policy sent a demoralizing message to many of us,” Peifer wrote. 53 “I think if you ask your junior colleagues, whose voices were largely not taken into account in this discussion, you’ll find that the vast majority of them support some sort of funding limitations. My recent conversations with colleagues suggest a significant number of senior scientists also share these concerns. The almost 1500 people who have already signed a petition to NIH Director Francis Collins to reinstate a funding cap provide an indication of the breadth of this opinion.”

Researcher Age

The age of NIH-funded researchers has been a contentious issue for decades and is arguably one of the most apt examples of the NIH’s institutional conservatism. Inequities in the age of NIH grant recipients may hinder the careers of young scientists and scientific progress as a whole.

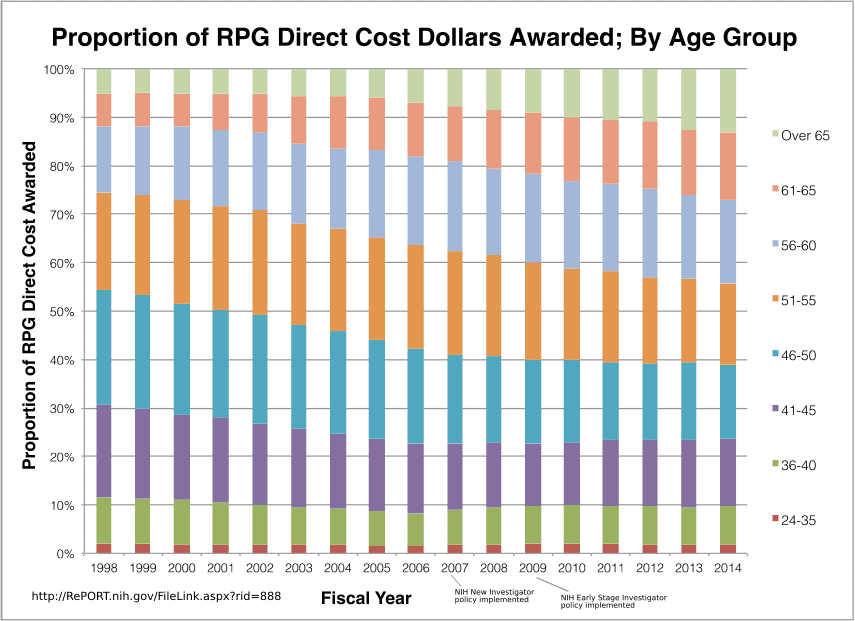

From 1995 to 2014, funding for R01 grantees over age 55 increased by $2.5 billion, while funding for grantees aged 55 and under increased by only $350 million. In 2014, 5% of grantees were over 71, compared to 1% in 1995.

RPG = Research Project Grants.

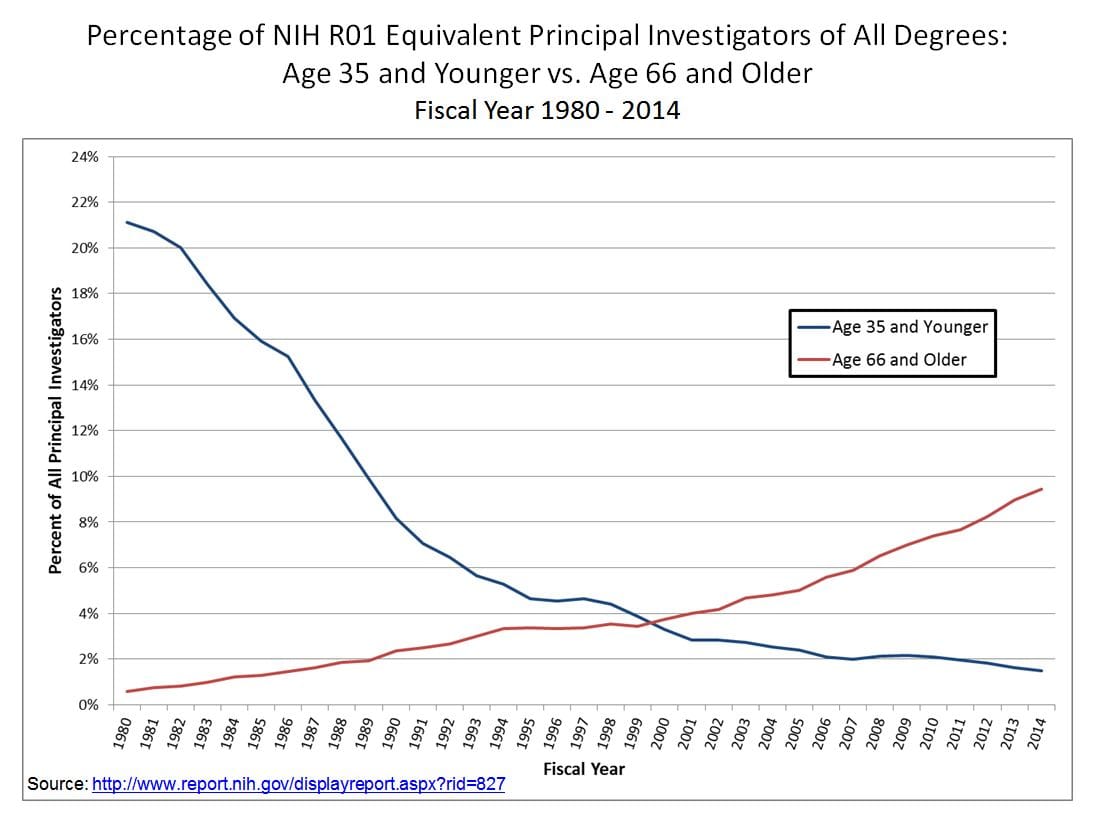

Another way to consider this gap is to simply plot the total share of R01 grants awarded to those under 35, compared to those aged over 65. The shift since 1980 is striking.

This age gap is perhaps most acutely felt among young researchers trying to access NIH funding for the first time. In 2020, the average age of a first-time R01 grant recipient was 44, up from 40 in 1995, and 34.3 in 1970.55 It is quite rare these days for researchers to get funded in their early 30s, and nearly unheard of to get funded in their 20s.

A 2017 paper56 found that NIH grantees are aging across the board. This demographic trend is most strongly felt in basic research: Since 1980, application rates for basic science-oriented grants have steadily fallen for researchers under 46 (almost a 40% drop between 1992 and 2014 alone), and steadily risen for researchers over 55.

Older Researchers Get Grants More Easily Than Younger Researchers

The NIH does not provide data on grant approval rates by age, only by broad career categories. But we can roughly infer researcher age from these categories.

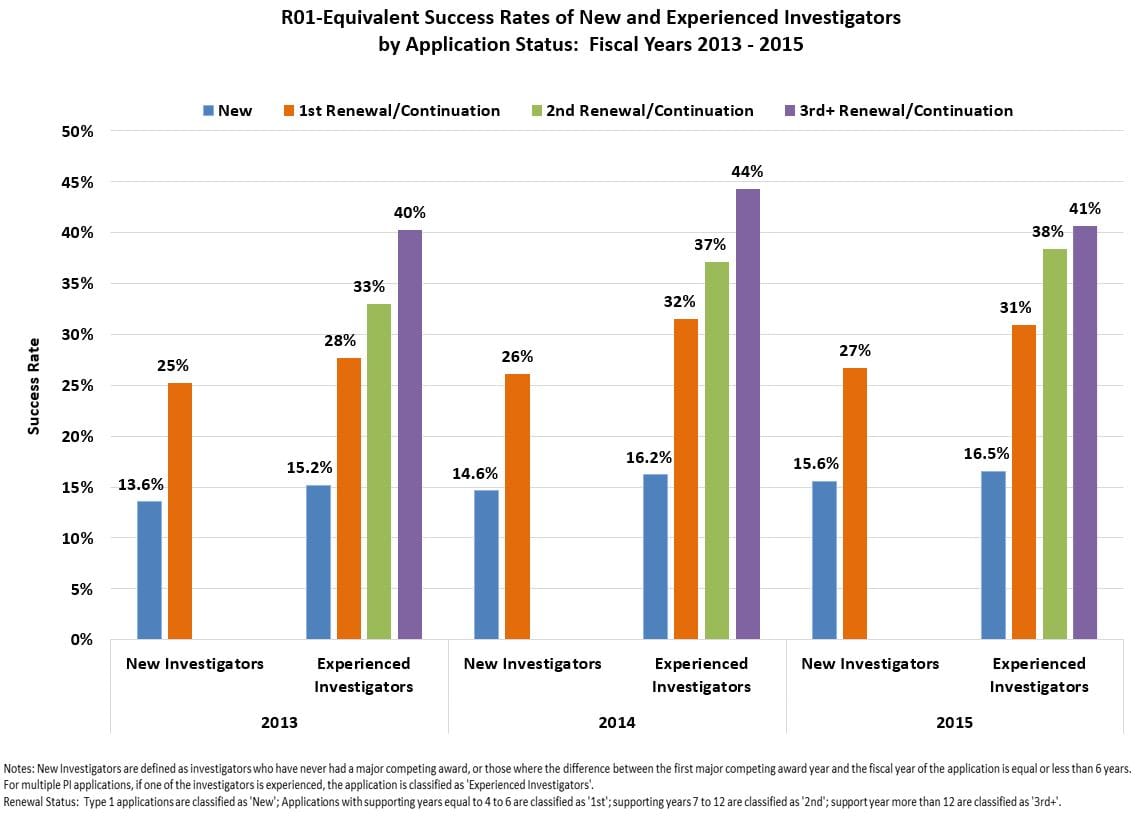

The NIH classifies “New” researchers as those who have yet to receive a standard NIH grant, like an R01. So new researchers can be anywhere from their late 20s to early 40s. In 2020, new researchers had a funding rate of 17.8%.

The “early stage investigators” (ESI) category is a subset of new investigators who have completed a terminal degree within the past ten years. ESIs exclude graduate students and postdoctoral fellows, and are therefore on the older end of new investigators. In 2020, the funding rate for ESIs was 27.7%.

“Established” researchers have already received NIH grants. In 2020, the funding rate for “established” researchers was 32.9%.

Two researchers whom I spoke with identified a phenomenon called the “valley of death.” According to them, NIH measures to boost grant acceptance for younger researchers have been reasonably effective, and well-established researchers still have their traditional advantages. But mid-career researchers are stuck in a “valley,” often struggling to win enough additional grants to join the ranks of the most established researchers.

Why Are These Trends Happening?

Extramural grants are distributed by study sections, so NIH executives don’t have a great deal of direct control over who gets them. That makes the age issue all the more puzzling. Still, the NIH grant recipient demographic trends have been remarkably consistent.

There are six possible explanations.

First, the U.S. population is getting older, on average. In 1970, 10% of Americans were 65 or older; in 1990, 12.5%; in 2010, 13%; in 2020, almost 17%.

Second, a major policy change is likely playing a role in the aging of American bioscience. In 1994, Congress amended the Age Discrimination in Employment Act to strike one of its few remaining age discrimination exemptions. 57 As a result, universities were prohibited from forcing faculty to retire at age 70. A 2021 study describes “dramatic effects” on the academic job market. 58 From 1971 to 1993, 1% of US faculty were over 70. From 1994 onward, 14% of faculty were over 70.

Third, as indicated by a few interviewees, postdocs tend to take longer to complete their work and advance to staff positions. Thus, there are fewer young researchers with the independence to apply for NIH grants.59

Fourth, older researchers have more existing grants than younger researchers, and it’s significantly easier to get grants renewed, rather than win first-time grants. 60

Fifth, there is a strong consensus that NIH grant applicants have become increasingly burdened by complex, arcane, bureaucratic rules (more on this in Part 4). Thus, established researchers with dedicated grant writing teams will have a systematic advantage over new researchers who have to learn all of these rules on their own.

Sixth, according to some papers61 and interviewees, study sections are biased against young researchers, perhaps because primary reviewers know who the grant applicants are, where they are in their careers, and thus may perceive older researchers as more competent.

Is The Current Preference For Older Researchers a Bad Thing For Science?

Twenty-somethings, in our society, can found multi-billion dollar tech companies, yet vanishingly few researchers under 30 ever receive funding from the NIH. Albert Einstein made his greatest breakthroughs in his late 20s; researchers with similar potential today are often confined to multi-year postdoctoral fellowships when they could be running their own labs.

Evidence suggests that, in many fields, people do their best work in their 20s and 30s. This seems to particularly be the case in domains like physics, mathematics and chess, where there is a heavy dependence on fluid intelligence, which often peaks in an individual’s 20s.

Other domains, including bioscience research, require the absorption of a massive corpus of work before any meaningful contributions can be made. Hence, perhaps great bioscientists tend to be older because they need time to not only amass all this knowledge, but integrate it in a manner that reveals potential breakthroughs. Great biologists and chemists have, anecdotally, tended to be older: Darwin, Mendeleev, Pasteur. There are obvious exceptions: Watson and Crick were 25 and 36 when they discovered the double-helix structure of DNA. Rosalind Franklin was in her early 30s when she took Photo 51, the X-ray image that ultimately helped unravel DNA’s structure.

Funding more young researchers would likely result in more high-risk/high-reward projects, albeit with a higher failure rate.

Many researchers have fought against the NIH demographic trends out of reasonable career concerns. An NIH grant is nearly mandatory for a successful bioscience research career, so if fewer young researchers are getting them, then fewer careers are being launched. If this bottleneck is being instigated by purposeful or accidental biases that favor the status quo of established scientists, then young researchers are being marginalized by an unfair system.

On efficiency grounds, there are also concerns that the lack of young researcher funding might be stifling the next generation of talent. As more grants drift to older researchers, there are fewer opportunities for young researchers to advance in their fields, especially as older researchers continue to have longer careers.

Beyond raw research efficiency, one interviewee framed the issue as a matter of “passing the baton.” Researchers who continue their work into their 70s and beyond consume grants and resources which could start the careers of younger researchers. According to this interviewee, European governments rarely give grants to individuals (in any domain) over 65 for this reason.

Attempted, But Failed, Solutions

The NIH has taken measures to combat shifting demographics in grant recipients. Many have failed.

The NIH’s biggest campaign to address the age issue is its Early Stage Investigator (ESI) policy. Launched in 2009 and overhauled in 2017 as the Next Generation Researchers Initiative, the program gave early researchers (defined as being within a decade of completing a terminal degree or medical school and having not received an NIH grant) an explicit advantage in grant evaluations.

“Applications from ESIs will be given special consideration during peer review and at the time of funding,” according to an NIH announcement. “Peer reviewers will be instructed to focus more on the proposed approach than on the track record, and to expect less preliminary data than would be provided by an established investigator.”62

More concretely, ESIs get a bump on the NIH “payline.” When NIH extramural grant applications are submitted, they are scored and ranked by percentile, with lower percentiles being better; a specific percentile, such as the 20th, is chosen as the payline. All applications that score better than that payline are awarded. ESI policy varies by institute, but ESIs tend to get a payline bump of 4 to 5 percentage points. So if the payline is 20%, then an ESI applicant may only have to be ranked in the top 25% of applications to get approval. 63
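The payline rule described above amounts to a simple threshold comparison. The sketch below is illustrative only: the function name, the default values (a 20% payline and a 5-point ESI bump, taken from the example in the text), and the treatment of an application scoring exactly at the payline are all assumptions, and actual policy varies by institute.

```python
def is_funded(percentile, payline=20.0, is_esi=False, esi_bump=5.0):
    """Return True if an application's percentile falls within the payline.

    Lower percentiles are better. The default payline and bump are the
    illustrative numbers from the text, not any institute's actual policy.
    """
    effective_payline = payline + (esi_bump if is_esi else 0.0)
    # Assumption: an application exactly at the payline is funded.
    return percentile <= effective_payline


print(is_funded(18.0))               # within the standard payline
print(is_funded(23.0))               # outside the standard payline
print(is_funded(23.0, is_esi=True))  # inside the bumped ESI payline
```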

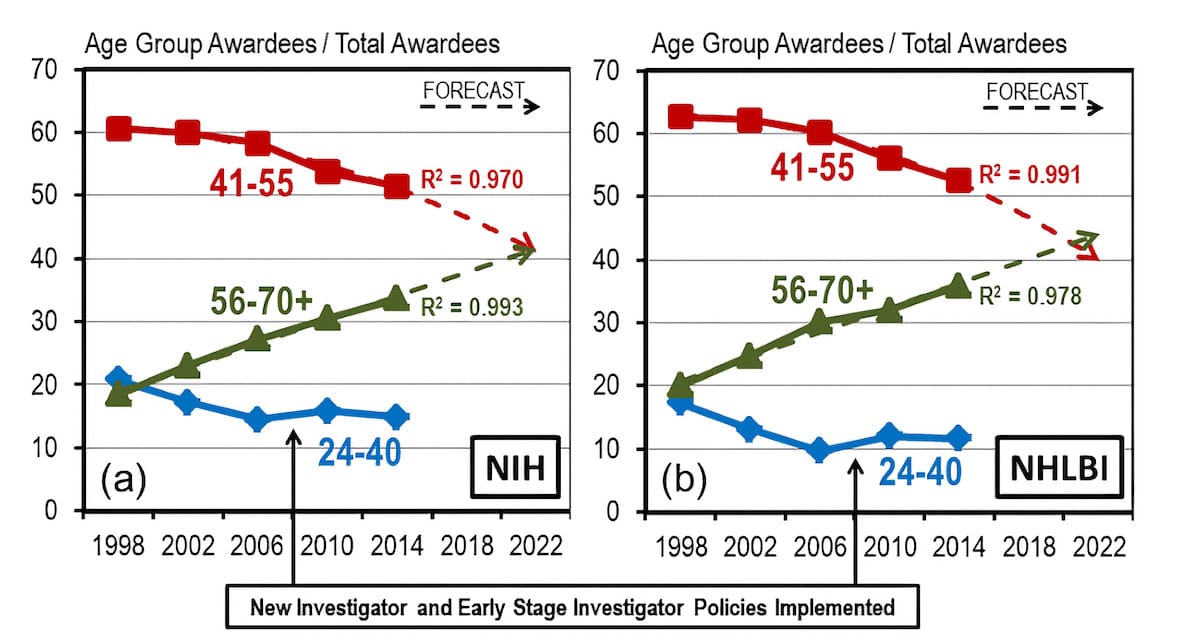

So how effective was the ESI program? Consider this graph:

The trend lines indicate that the share of grant money going to young researchers stopped declining shortly before ESI began for some reason. After ESI was implemented, the share increased slightly and then resumed its decline, albeit at a slower pace than before. It was clear that ESI was not creating a significant reversal in the trend as hoped, so many researchers continued to pressure the NIH for additional reforms.

In 2017, the NIH overhauled ESI with the Next Generation Researchers Initiative. Since then, the number of ESI researchers funded increased from 978 in 2016 to 1,412 in 2020, while their grant funding rate (for R01s and equivalents, the standard NIH grants) rose from 23.6% to 27.7%. So there seemed to be some progress, but…

Over the same time frame, the funding rate for researchers categorized by the NIH as “established” rose from 28.6% to 32.9%. And the funding rate for “new” investigators (at least one year of faculty experience), rose from 16.1% to 17.8%.64

So the ESI rate rose by 4.1 percentage points, the established rate rose by 4.3 percentage points, and the new investigator rate rose by 1.7 percentage points. Meanwhile, the NIH’s budget rose from $31.3 billion to $40.3 billion, or 29%.
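The increases in funding rates quoted above are differences between two percentages (percentage points), whereas the budget figure is a relative change. The sketch below computes both kinds of change from the numbers given in the text, so the two can be compared on the same footing; the function and variable names are illustrative.

```python
# Funding rates (percent) for 2016 and 2020, as quoted in the text.
rates = {
    "ESI": (23.6, 27.7),
    "established": (28.6, 32.9),
    "new": (16.1, 17.8),
}


def point_change(start, end):
    """Absolute change, in percentage points."""
    return end - start


def relative_change(start, end):
    """Change relative to the starting value, in percent."""
    return 100 * (end - start) / start


for group, (r2016, r2020) in rates.items():
    print(f"{group}: {point_change(r2016, r2020):+.1f} points, "
          f"{relative_change(r2016, r2020):+.1f}% relative")

# NIH budget in billions of dollars over the same period.
print(f"budget: {relative_change(31.3, 40.3):+.1f}% relative")
```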

Other initiatives, such as the Early Career Reviewer (ECR) program, have been more successful. One of the reasons researchers choose to serve on study sections is that it gives them first-hand insight into how to play the application game. But study sections are composed largely of researchers who have already received NIH grants. The ECR program invites new researchers (with at least one year of faculty employment, some publications, and no NIH grants) to sit in on study sections and learn how the review process works.65

Still, it’s surprising that ESI did not balance the scales for younger NIH applicants.

Failed Reforms and Brute Force Solutions

Given the NIH leadership’s indirect control over the grant distribution process, many factors could have played into the continued concentration of grants among top researchers over the past five years. But there’s not an easy answer.

The ESI and the Next Generation Researchers Initiative were likely insufficient restructurings of the NIH’s extramural process, according to sources interviewed for this report. Francis Collins and the NIH’s leadership could have pushed harder, but they may have been discouraged from doing so by older researchers.

A typical researcher has to attend college for undergraduate studies, then a PhD or medical school, followed by a postdoctoral fellowship – often, a grueling 10 or more years of schooling. After all that, most researchers would be lucky to become a junior faculty member at a university or a low-level researcher at a private company, where they’ll have to work under established researchers for probably another six or more years before getting a decent shot at an independent research lab.

For individuals in the middle of that system, it can seem unfair, even guild-like. I’m sure there are many brilliant postdoctoral researchers, or even PhD students, with exciting research ideas who have almost no chance of getting direct NIH funding, let alone control of their own labs. But for individuals who have already gone through the system and come out the other side, its many tiers act as a barrier to entry that protects their position. Their prestige and salary are in large part built on having gone through that gauntlet. If a brilliant 29-year-old PhD student could snag an NIH grant, they would be jumping the queue and undermining the career value of decades of education and training.

Thus, established scientists have a strong incentive to maintain a high concentration of grants for later-career researchers, and to use the standard track researcher system as a credential base for filtering out grant applicants.

As I discuss in Parts 2 and 7, the NIH’s leadership is under pressure from its beneficiaries to maintain the status quo. Therefore, I believe it’s plausible that concerted pushback by older researchers has hindered reform.

Reversing the Demographic Trend

So, if the ESI policy and the Next Generation Researchers Initiative aren’t helping young researchers enough, what can the NIH do to reverse the demographic trend?

The most direct method is to establish a new grant program with age or credential caps. If the NIH wanted to get really aggressive, it could produce more grants specifically for graduate students and postdoctoral fellows.

One interviewee pointed out that putting more money into postdoctoral researchers is great policy from an efficiency perspective, because postdocs are cheap. Their salaries typically top out around $70K, which is almost a third of what PIs make on NIH grants. The NIH could directly fund some of the most ambitious postdoctoral fellows at a low cost, rather than rely on normal grants trickling down to them. Fully funded postdoctoral fellows could then contribute their skills to other labs, or even break away and start some of their own work.

While this would undoubtedly bring money to younger researchers, I’m unsure of the secondary effects. For instance, would major universities throw finite research space and funds behind younger researchers? How would older researchers feel about their brightest assistants abandoning them for their own work, before climbing the requisite academic ladder? It’s hard to say, but more ambitious efforts to fund young researchers could be transformative for biomedical research as a whole.